Facebook’s Stricter Measures Against Misinformation

Facebook has announced that it will take stronger action against users who frequently spread misinformation and fake news. The social media giant, headquartered in the United States, plans to penalize repeat offenders by imposing restrictions on their accounts.

Addressing the Misinformation Challenge

The spread of misinformation on social media platforms has become a significant concern, prompting companies to take measures to limit it. Facebook, in particular, has intensified its efforts against misinformation on topics such as COVID-19, vaccines, climate change, and elections.

In a blog post, Facebook stated, “Whether it’s false or misleading content about COVID-19 and vaccines, climate change, elections, or other topics, we’re making sure fewer people see misinformation on our apps.”

Implementation of Penalties

As part of its strategy, Facebook announced that it would reduce the distribution of all News Feed posts from individual accounts that repeatedly share content flagged by the platform’s fact-checking partners. In practice, posts from users with a history of spreading misinformation will have limited reach in other users’ News Feeds.

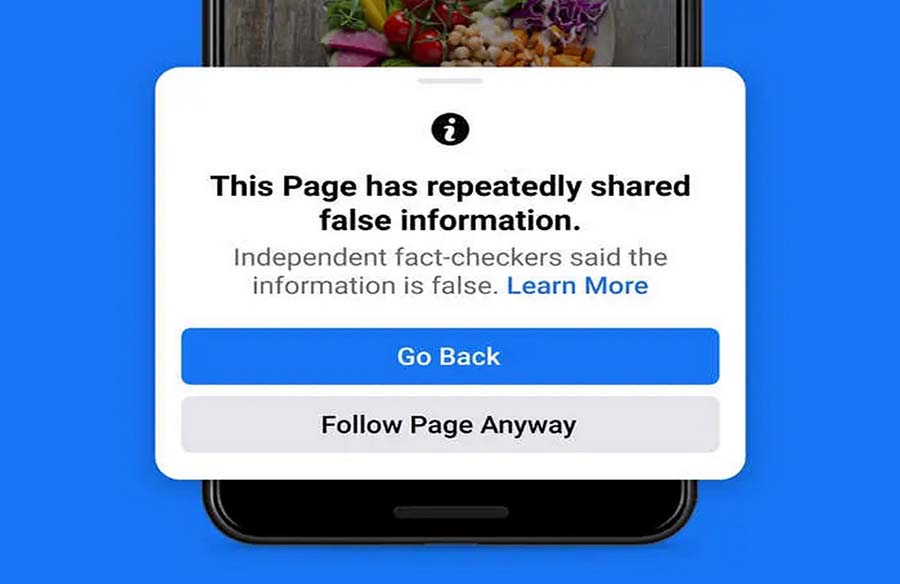

The social media company is also rolling out an enhanced alert tool that notifies users when they interact with content that has been flagged by fact-checking partners. This update aims to help users identify potentially unreliable information more easily. Additionally, Facebook intends to show informative pop-up messages to users before they engage with Pages that have a history of sharing content flagged by fact-checkers.

Informing Users and Correcting Misinformation

Facebook’s notifications will include the fact-checkers’ articles debunking the false claims in question. Users will also be prompted to share the corrected information with their connections. Moreover, individuals who share false information may receive notifications warning them that their posts could be ranked lower in the News Feed, which would make them visible to fewer people.

Facebook presents these steps as part of its commitment to combating misinformation and promoting accurate information on its platform. The measures aim to improve the overall quality of content and foster a safer, more reliable online environment for users.